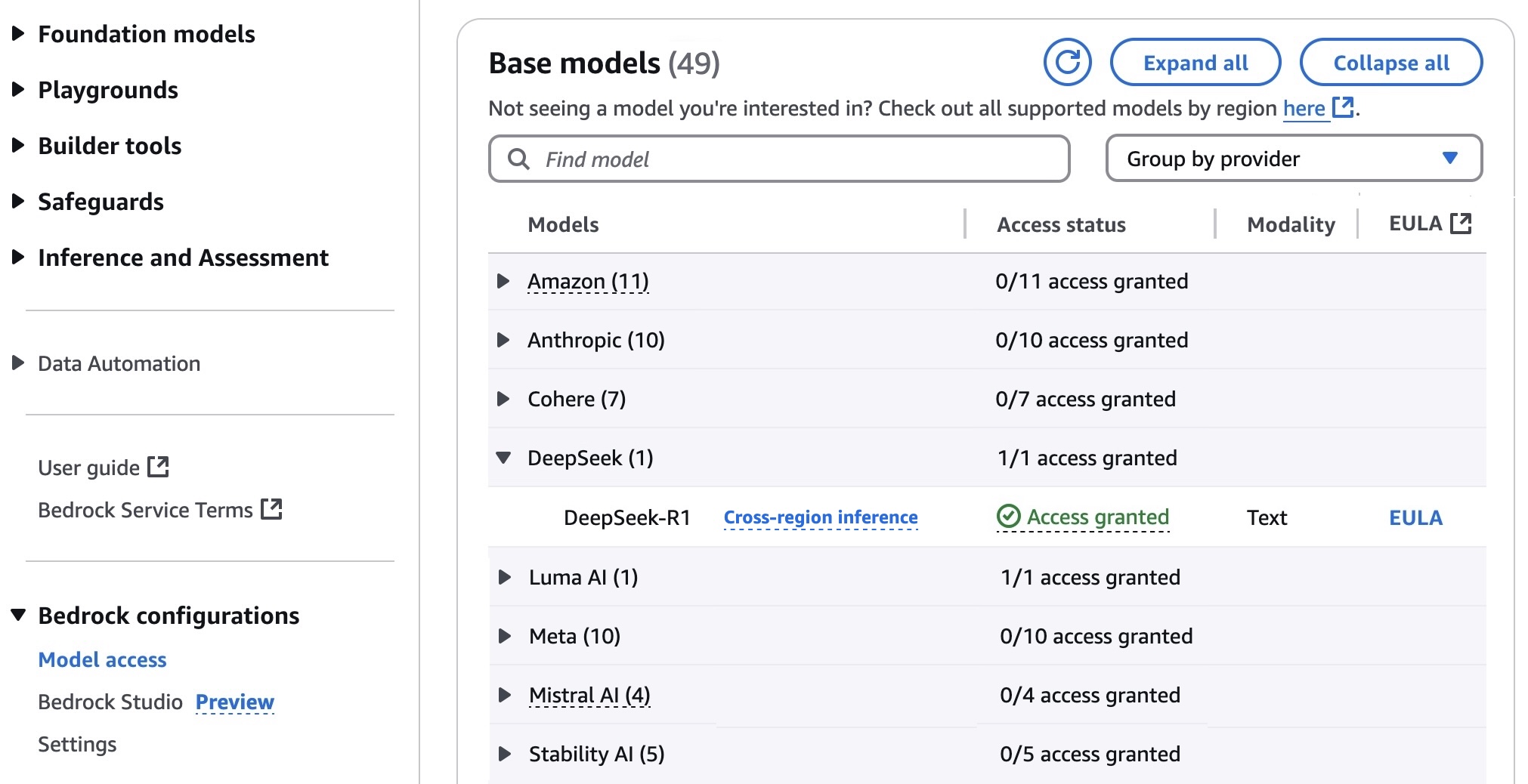

In my previous writeup, I wrote that you had to either spend a lot (using GPU) or put up with very slow performance (using CPU) if you wanted to use DeepSeek R1 on AWS. Not anymore. Since 10 Mar 2025, AWS has offered DeepSeek R1 as a base model (in selected regions). Check out the AWS blog for a demo walkthrough.

Just take note that you may have to increase the maximum output length for your request to complete; this applies to most reasoning models, since their reasoning also counts toward the output. In my test, the output stopped abruptly halfway because the default maximum output length is only 4096 tokens. Extending the output length solved the problem.
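If you call the model from code rather than the console, the same setting can be passed with the request. Below is a minimal sketch, assuming the model is reached through the Amazon Bedrock Converse API via `boto3`. The model ID, the region, and the limit of 8192 are my assumptions for illustration, so check the console for the values that apply to your account. The helper names (`build_request`, `was_truncated`, `ask`) are made up for this example.

```python
# Assumed values: verify the model ID and region in your own AWS console.
MODEL_ID = "us.deepseek.r1-v1:0"   # assumed inference profile ID for DeepSeek R1
MAX_OUTPUT_TOKENS = 8192           # illustrative value, higher than the 4096 default


def build_request(prompt, max_tokens=MAX_OUTPUT_TOKENS):
    """Build the keyword arguments for a Converse call with a raised output limit."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens},
    }


def was_truncated(response):
    """Return True if the model stopped because it hit the output token limit."""
    return response.get("stopReason") == "max_tokens"


def ask(prompt, region="us-west-2"):
    """Send the prompt and warn if the answer was cut off (needs AWS credentials)."""
    import boto3  # imported here so the helpers above work without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_request(prompt))
    if was_truncated(response):
        print("Warning: output hit the token limit; try a larger max_tokens.")
    return response
```

Checking `stopReason` is a handy way to tell a genuinely finished answer from one that was cut off at the limit, which is what I ran into with the default.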