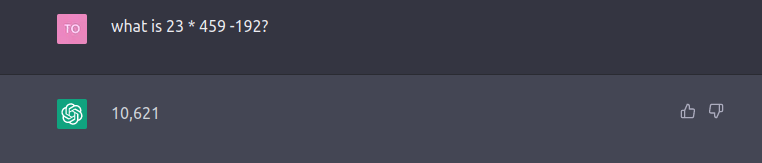

People are often amused or surprised when ChatGPT fails to answer a seemingly simple question correctly (e.g. multiplying two numbers), yet handles very complex ones.

The way to think about ChatGPT and other LLM tools is that they are assistants, not oracles.

AI tools like ChatGPT have a mental model of the world, and try to imagine the best answer for any given prompt. But they won’t get it right every time, and when they don’t have an answer they will try their best anyway (i.e. fabricate one).

An assistant makes mistakes, and that’s why you should expect ChatGPT’s output to contain mistakes.
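One way to act on this is to treat a reply as a draft and check the parts that can be checked. The sketch below is only an illustration for the multiplication case: `check_product` is a hypothetical helper (not part of any ChatGPT API), and it assumes the model's final answer is the last integer in its reply.

```python
import re


def check_product(a: int, b: int, model_reply: str) -> bool:
    """Return True if the last integer in the reply equals a * b.

    Hypothetical helper for illustration; assumes the model states
    its final answer as the last whole number in the text.
    """
    # Drop thousands separators so "56,088" is read as 56088.
    cleaned = model_reply.replace(",", "")
    numbers = re.findall(r"\d+", cleaned)
    if not numbers:
        # No number in the reply means there is nothing to verify.
        return False
    return int(numbers[-1]) == a * b
```

For example, `check_product(123, 456, "123 x 456 = 56,088")` passes, while a reply ending in a slightly different number does not. The same idea applies beyond arithmetic: let the assistant draft, and verify with something exact.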

That said, ChatGPT is really good at tasks that don’t require precision (e.g. creative writing).

Update (2023-02-01): OpenAI has released a newer version of ChatGPT that is supposed to have improved factuality and mathematical capabilities. Well, it didn’t work for me.