This is a relatively new attack technique that specifically targets developers. It typically begins under the guise of a technical interview, where candidates are asked to review a codebase by cloning a Git repository. Unknown to the victim, the repository is malicious.

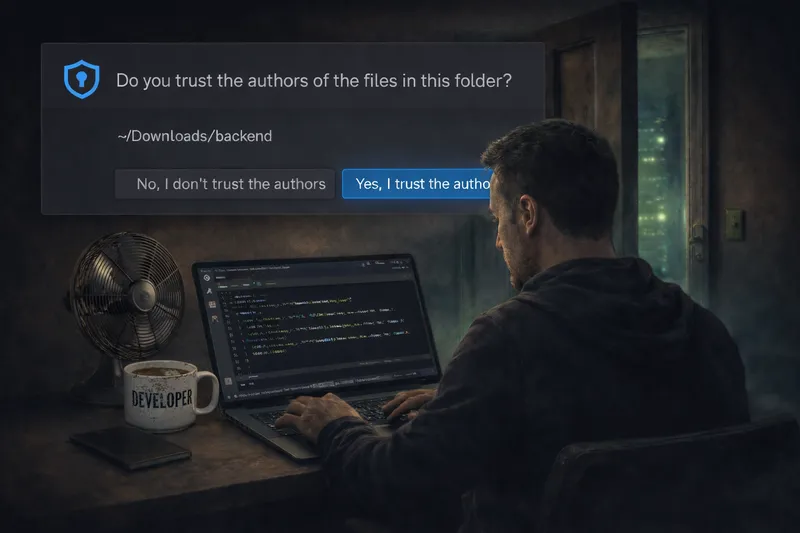

The attack leverages a lesser-known feature of Visual Studio Code called Tasks. When a developer opens the cloned project and trusts the workspace, VS Code can automatically interpret and execute configurations defined in tasks.json. This behavior allows a backdoor or malicious command to run without the developer explicitly initiating it.

Notably, many developers – including myself – are unaware of how powerful and potentially dangerous this feature can be when abused. This makes the attack particularly effective, as it exploits implicit trust in development tools rather than traditional software vulnerabilities.

One variant of the malware deployed by this technique targets the crypto wallets on the developer’s machine.

Jamf Threat Labs uncovers North Korean hackers exploiting VS Code to deploy backdoor malware via malicious Git repositories in the Contagious Interview campaign

Source: Threat Actors Expand Abuse of Microsoft Visual Studio Code